
First, what is the Mahalanobis distance?

Second, some docs:

Wikipedia page
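For reference, here is the usual definition, which is what the structure further down computes. Let $\boldsymbol{\mu}$ be the vector of feature means and $S$ the covariance matrix of the sample set. The distance of a sample $\mathbf{x}$ to the distribution, and the distance between two samples $\mathbf{x}$ and $\mathbf{y}$, are:

$$
D_M(\mathbf{x}) = \sqrt{(\mathbf{x}-\boldsymbol{\mu})^{\mathsf T}\, S^{-1}\, (\mathbf{x}-\boldsymbol{\mu})}
$$

$$
d_M(\mathbf{x},\mathbf{y}) = \sqrt{(\mathbf{x}-\mathbf{y})^{\mathsf T}\, S^{-1}\, (\mathbf{x}-\mathbf{y})}
$$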

We are going to need:

- Covariance matrix
- Matrix inversion
- Matrix multiplication
- Vector mean

The matrix and vector library that MQL5 provides covers all of these needs.
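To illustrate two items on that list outside MetaTrader (the vector mean and the covariance matrix), here is a minimal standalone C++ sketch, not MQL5. It works on a table where each row is a sample and each column is a feature. The helper names `featureMeans` and `covariance` are hypothetical. The sketch divides by N−1 (sample covariance). It is worth checking the MQL5 `matrix::Cov()` documentation for the normalization that method uses, because the scale of the resulting distance depends on it.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Rows are samples (observations), columns are features.
using Matrix = std::vector<std::vector<double>>;

// Mean of each feature (column) across all samples.
std::vector<double> featureMeans(const Matrix& data) {
    const std::size_t n = data.size();
    const std::size_t f = data.empty() ? 0 : data[0].size();
    std::vector<double> mean(f, 0.0);
    for (const auto& row : data)
        for (std::size_t j = 0; j < f; ++j) mean[j] += row[j];
    for (double& m : mean) m /= static_cast<double>(n);
    return mean;
}

// Sample covariance (divides by n - 1); the result is features x features.
Matrix covariance(const Matrix& data) {
    const std::size_t n = data.size();
    assert(n >= 2);  // a covariance needs at least two samples
    const std::size_t f = data[0].size();
    const std::vector<double> mean = featureMeans(data);
    Matrix cov(f, std::vector<double>(f, 0.0));
    for (const auto& row : data)
        for (std::size_t a = 0; a < f; ++a)
            for (std::size_t b = 0; b < f; ++b)
                cov[a][b] += (row[a] - mean[a]) * (row[b] - mean[b]);
    for (auto& r : cov)
        for (double& v : r) v /= static_cast<double>(n - 1);
    return cov;
}
```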

Okay, so we are going to build a structure that we prepare once and reuse for as long as the sample set does not change.

What do we mean by sample set?

A set of observations with properties.

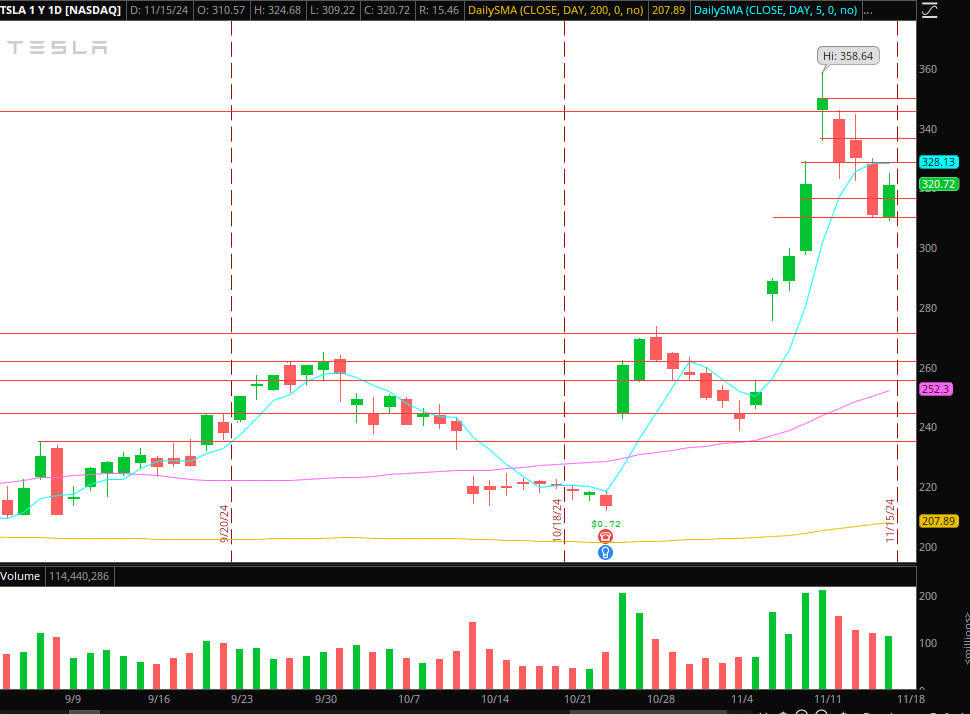

To simplify, let's say you have 10 candles (chart candles), and each one has OHLC values. So you have 10 samples and 4 properties, or 4 features.
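The 10 candles therefore form a 10×4 table with one row per sample and one column per feature. The covariance matrix computed from that table is 4×4, with one row and one column per feature. The following minimal C++ sketch shows how to turn per-feature arrays (such as open, high, low, and close) into that table. This is the same transposition that `calculate_inverse_covariance_matrix()` performs before calling `Cov(false)`. Both helper names are hypothetical.

The sketch also includes a quick sanity check. The covariance of N samples has rank at most N−1, so it can only be inverted when there are more samples than features. That condition is necessary but not sufficient: strongly correlated features such as OHLC prices can still produce a nearly singular matrix.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

// Each inner vector holds one feature across all samples.
// Returns a samples x features matrix: row s is sample s, column f is feature f.
Matrix samplesByFeatures(const std::vector<std::vector<double>>& features) {
    assert(!features.empty());
    const std::size_t totalFeatures = features.size();
    const std::size_t totalSamples = features[0].size();
    Matrix out(totalSamples, std::vector<double>(totalFeatures));
    for (std::size_t f = 0; f < totalFeatures; ++f) {
        assert(features[f].size() == totalSamples);  // one value per sample
        for (std::size_t s = 0; s < totalSamples; ++s) out[s][f] = features[f][s];
    }
    return out;
}

// Necessary (not sufficient) condition for an invertible covariance matrix.
bool enoughSamples(std::size_t totalSamples, std::size_t totalFeatures) {
    return totalSamples > totalFeatures;
}
```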

Here is an example usage:

```mql5
double open[],high[],low[],close[];
ArrayResize(open,10,0);
ArrayResize(high,10,0);
ArrayResize(low,10,0);
ArrayResize(close,10,0);
// sample i holds bar i+1 (the last 10 closed bars)
for(int i=0;i<10;i++){
   open[i]=iOpen(_Symbol,_Period,i+1);
   high[i]=iHigh(_Symbol,_Period,i+1);
   low[i]=iLow(_Symbol,_Period,i+1);
   close[i]=iClose(_Symbol,_Period,i+1);
}
mahalanober M;
M.setup(4,10);            // 4 features, 10 samples
M.fill_feature(0,open);
M.fill_feature(1,high);
M.fill_feature(2,low);
M.fill_feature(3,close);  // all filled: inverse covariance is computed here
double md=M.distanceOfSampleToDistribution(2);
Print("Mahalanobis Distance of sample 2 to the distribution "+DoubleToString(md,4));
md=M.distanceOfSampleToSample(5,0);
Print("Mahalanobis Distance of sample[0] to sample[5] within the distribution "+DoubleToString(md,4));
```

And here is the structure:

```mql5
struct mahalanober{
private:
   vector features[];                // one vector per feature, each holding all samples
   bool filled[];                    // which features have been filled
   vector feature_means;             // mean of each feature
   matrix covariance_matrix_inverse;
   int total_features,total_samples;
public:
   mahalanober(void){reset();}
   ~mahalanober(void){reset();}
   void reset(){
      total_features=0;
      total_samples=0;
      ArrayFree(features);
      ArrayFree(filled);
      feature_means.Init(0);
      covariance_matrix_inverse.Init(0,0);
   }
   void setup(int _total_features,
              int _total_samples){
      total_features=_total_features;
      total_samples=_total_samples;
      ArrayResize(features,total_features,0);
      ArrayResize(filled,total_features,0);
      ArrayFill(filled,0,total_features,false);
      feature_means.Init(total_features);
      for(int i=0;i<ArraySize(features);i++){
         features[i].Init(total_samples);
      }
   }
   // copies one feature's values (one per sample); once every feature
   // is filled, the inverse covariance matrix is (re)computed
   bool fill_feature(int which_feature_ix,
                     double &values_across_samples[]){
      if(which_feature_ix>=0 && which_feature_ix<ArraySize(features)){
         if(ArraySize(values_across_samples)==total_samples){
            for(int i=0;i<total_samples;i++){
               features[which_feature_ix][i]=values_across_samples[i];
            }
            feature_means[which_feature_ix]=features[which_feature_ix].Mean();
            filled[which_feature_ix]=true;
            if(all_filled()){
               calculate_inverse_covariance_matrix();
            }
            return(true);
         }else{
            Print("MHLNB::fill_feature::Number of values does not match total samples");
         }
      }else{
         Print("MHLNB::fill_feature::Feature("+IntegerToString(which_feature_ix)+") does not exist");
      }
      return(false);
   }
   // sqrt((x - mean)^T * S^-1 * (x - mean))
   double distanceOfSampleToDistribution(int which_sample){
      if(all_filled()){
         if(which_sample>=0 && which_sample<total_samples){
            matrix term0;                            // (features x 1) difference vector
            term0.Init(total_features,1);
            for(int i=0;i<total_features;i++){
               term0[i][0]=features[i][which_sample]-feature_means[i];
            }
            matrix term1=term0.Transpose();
            matrix term2=term1.MatMul(covariance_matrix_inverse);
            matrix last_term=term2.MatMul(term0);    // 1 x 1
            return(MathSqrt(last_term[0][0]));
         }else{
            Print("MHLNB::distanceOfSampleToDistribution()::Sample("+IntegerToString(which_sample)+") does not exist, returning 0.0");
         }
      }else{
         list_unfilled("distanceOfSampleToDistribution()");
      }
      return(0.0);
   }
   // sqrt((a - b)^T * S^-1 * (a - b))
   double distanceOfSampleToSample(int sample_a,int sample_b){
      if(all_filled()){
         if(sample_a>=0 && sample_a<total_samples){
            if(sample_b>=0 && sample_b<total_samples){
               matrix term0;                         // (features x 1) difference vector
               term0.Init(total_features,1);
               for(int i=0;i<total_features;i++){
                  term0[i][0]=features[i][sample_a]-features[i][sample_b];
               }
               matrix term1=term0.Transpose();
               matrix term2=term1.MatMul(covariance_matrix_inverse);
               matrix last_term=term2.MatMul(term0); // 1 x 1
               return(MathSqrt(last_term[0][0]));
            }else{
               Print("MHLNB::distanceOfSampleToSample()::Sample("+IntegerToString(sample_b)+") does not exist, returning 0.0");
            }
         }else{
            Print("MHLNB::distanceOfSampleToSample()::Sample("+IntegerToString(sample_a)+") does not exist, returning 0.0");
         }
      }else{
         list_unfilled("distanceOfSampleToSample()");
      }
      return(0.0);
   }
private:
   void calculate_inverse_covariance_matrix(){
      // rows = samples, columns = features
      matrix samples_by_features;
      samples_by_features.Init(total_samples,total_features);
      for(int f=0;f<total_features;f++){
         for(int s=0;s<total_samples;s++){
            samples_by_features[s][f]=features[f][s];
         }
      }
      // rowvar=false: each column is treated as a variable (feature)
      matrix covariance_matrix=samples_by_features.Cov(false);
      covariance_matrix_inverse=covariance_matrix.Inv();
   }
   bool all_filled(){
      if(total_features>0){
         for(int i=0;i<total_features;i++){
            if(!filled[i]){
               return(false);
            }
         }
         return(true);
      }
      return(false);
   }
   void list_unfilled(string fx){
      for(int i=0;i<total_features;i++){
         if(!filled[i]){
            Print("MHLNB::"+fx+"::Feature("+IntegerToString(i)+") is not filled!");
         }
      }
   }
};
```
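If you want to check the numbers outside MetaTrader, here is a standalone C++ sketch of the same computation. It inverts the covariance matrix once, then evaluates sqrt(d^T * S^-1 * d), where d = x − mean for the sample-to-distribution case and d = a − b for the sample-to-sample case. Gauss-Jordan elimination with partial pivoting stands in for MQL5's `matrix::Inv()`; that is a substitute technique, not what MQL5 does internally. The names `invert`, `quadraticDistance`, `distanceToDistribution`, and `distanceBetweenSamples` are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

using Vec = std::vector<double>;
using Matrix = std::vector<Vec>;

// Gauss-Jordan inversion with partial pivoting.
// Returns false if the matrix is (numerically) singular.
bool invert(Matrix a, Matrix& inv) {
    const std::size_t n = a.size();
    inv.assign(n, Vec(n, 0.0));
    for (std::size_t i = 0; i < n; ++i) inv[i][i] = 1.0;
    for (std::size_t col = 0; col < n; ++col) {
        std::size_t pivot = col;  // pick the largest remaining entry in this column
        for (std::size_t r = col + 1; r < n; ++r)
            if (std::fabs(a[r][col]) > std::fabs(a[pivot][col])) pivot = r;
        if (std::fabs(a[pivot][col]) < 1e-12) return false;  // arbitrary threshold
        if (pivot != col) {
            std::swap(a[col], a[pivot]);
            std::swap(inv[col], inv[pivot]);
        }
        const double p = a[col][col];
        for (std::size_t j = 0; j < n; ++j) { a[col][j] /= p; inv[col][j] /= p; }
        for (std::size_t r = 0; r < n; ++r) {  // eliminate this column from other rows
            if (r == col) continue;
            const double factor = a[r][col];
            for (std::size_t j = 0; j < n; ++j) {
                a[r][j] -= factor * a[col][j];
                inv[r][j] -= factor * inv[col][j];
            }
        }
    }
    return true;
}

// sqrt(d^T * S^-1 * d) for a difference vector d and inverse covariance S^-1.
double quadraticDistance(const Vec& d, const Matrix& covInv) {
    assert(covInv.size() == d.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < d.size(); ++i)
        for (std::size_t j = 0; j < d.size(); ++j)
            sum += d[i] * covInv[i][j] * d[j];
    return std::sqrt(sum);
}

// Sample to distribution: d = x - mean.
double distanceToDistribution(const Vec& x, const Vec& mean, const Matrix& covInv) {
    Vec d(x.size());
    for (std::size_t i = 0; i < x.size(); ++i) d[i] = x[i] - mean[i];
    return quadraticDistance(d, covInv);
}

// Sample to sample: d = a - b.
double distanceBetweenSamples(const Vec& a, const Vec& b, const Matrix& covInv) {
    Vec d(a.size());
    for (std::size_t i = 0; i < a.size(); ++i) d[i] = a[i] - b[i];
    return quadraticDistance(d, covInv);
}
```

As in the struct, the design choice is to compute the inverse once and reuse it for every distance query. Only the cheap quadratic form is evaluated per call.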

If you see errors, let me know.

cheers
